Do You Have the Right Computer/Phone/Headset to Develop for AR and VR on Oculus, Android, or iOS? One Chart and Seven User-Stories that will make it all Clear…

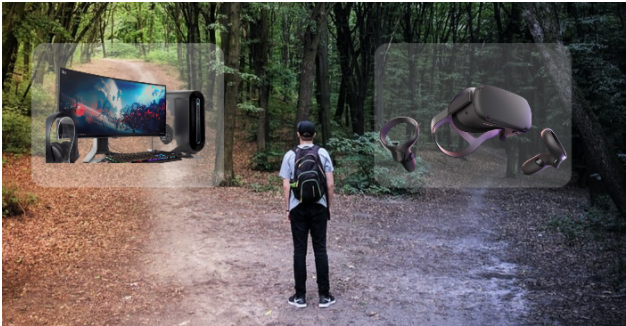

So, you want to Develop an Application for VR or AR with Unity…

We guess that you might have a few questions:

- What about my computer? Is it powerful enough?

- What about my VR Headset? (Will it work with the computer I have? Does it need a special cord?)

- What about my Phone? (Does it support Augmented Reality? What if I have an iPhone?, etc.)

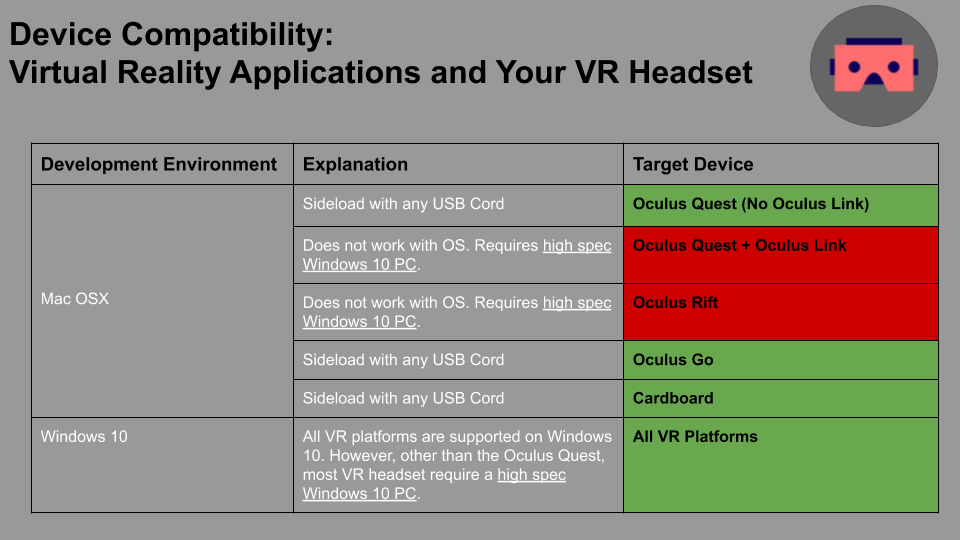

Here is a chart to help you navigate, followed by a few user-stories that will help you understand if you do (or don’t) have the necessary Hardware to create XR Software.

VR

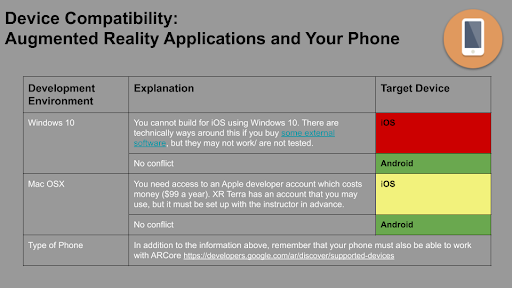

AR

Carol (Mac > Quest)

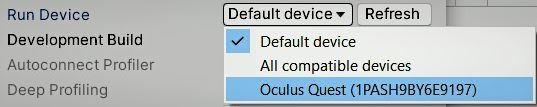

Carol wants to develop an app for the Oculus Quest: a virtual “Field of Bunnies” experience for her aunt, who loves bunnies. Her computer is a MacBook Pro laptop.

From videos of people developing XR apps, she has seen a workflow where you make a change in the Unity editor, hit save, throw on the VR headset, and immediately view the change from within VR.

Unfortunately, as she quickly learns, the ability to do live editing like this requires more than just plugging a cord into her computer:

- It requires the Oculus Link Software along with her USB cord.

- The Oculus Link Software will not run on a Mac.

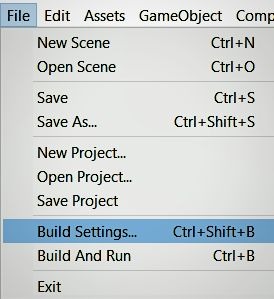

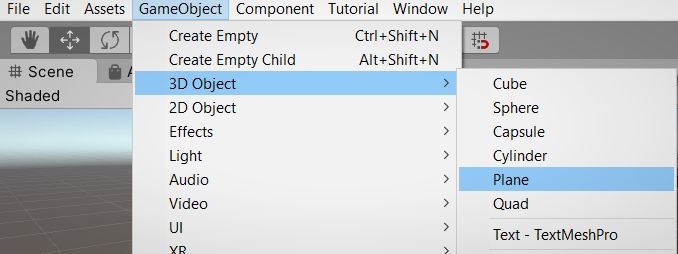

That means Carol’s only real option, other than buying a high-spec Windows 10 PC, is to develop her game on the MacBook. She will then need to ‘Build and Run’ the project each time she wants to see what her changes in Unity have done to the VR game. Alas, the “Field of Bunnies” will take a little longer to test properly before she shows the experience to her aunt.

Arnie (Mac > Oculus Rift)

Arnie is researching VR headsets, and decides they like the Oculus Rift S headset best. Their next step is to look into what kind of computer they’ll need to run it.

To their dismay, Arnie’s only computer is a Mac desktop, which will not run Oculus Rift software. They then must debate the merits of buying a Windows machine to run VR or settling for a wireless headset like the Oculus Quest, which has a more limited selection of games to play.

- After hitting this roadblock, Arnie decides they will invest in a high-spec, ‘VR-Ready’ Windows 10 laptop. They find that not only can they use the Oculus Rift, but they can use pretty much any VR headset they want.

- Also, if they decide to use the Oculus Link cable with the Quest 1, they can run any VR experience that runs on a Rift. In fact, with a Quest 2 and Oculus Link, they could run VR experiences that are even more demanding.

Joshua (Windows Computer > Oculus Go)

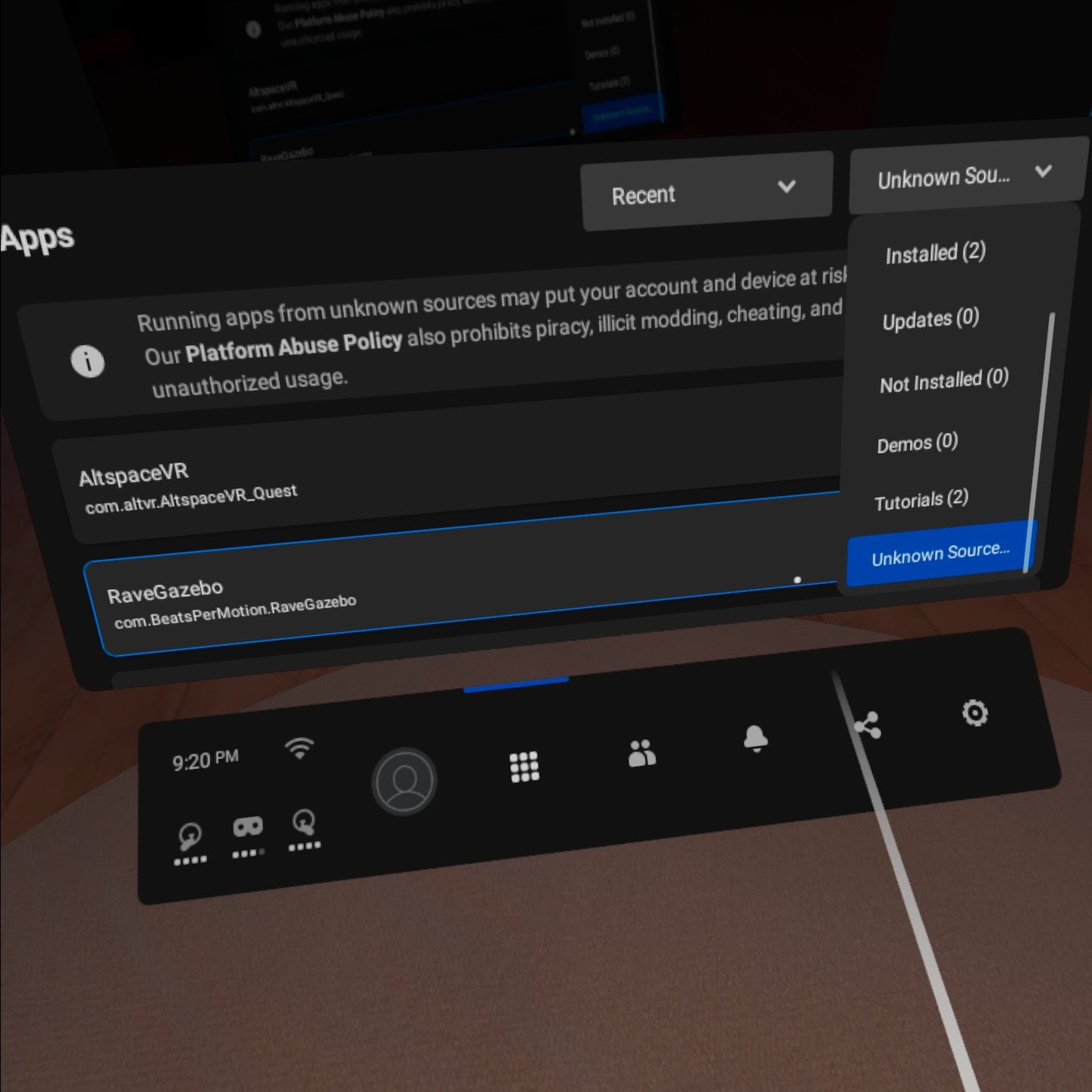

Joshua got an Oculus Go a year ago and has been using it to watch 360 videos on YouTube. Inspired by the cool art, he decides to make his own 360 content using Unity on his Windows laptop. He follows the instructions on Oculus’s own Developer Blog to set up his Unity project. Using the same cable that charges the Go, he plugs the headset into his laptop and sideloads a quick 5-second test build from Unity.

Are you interested in learning more about XR development? We are currently accepting applications…

Cameron (Windows or Mac > Google Cardboard)

Cameron is trying to make VR content on a low budget, so they get a Google Cardboard for their phone. After turning on developer mode on their Android phone, they can connect it to their computer and sideload a build from Unity, with the Cardboard package installed, in order to test it.
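Unity’s Build and Run normally handles the sideloading step itself. If Cameron wanted to check the connection and install a build by hand, a minimal Python sketch could wrap the Android Debug Bridge (`adb`) command-line tool. This assumes `adb` is installed and on the PATH; the function names and the APK path are hypothetical and only for illustration.

```python
import subprocess


def parse_adb_devices(output: str) -> list[str]:
    """Return serial numbers of devices that `adb devices` reports as ready."""
    serials = []
    for line in output.splitlines():
        parts = line.split()
        # Ready devices appear as "<serial> device"; entries marked
        # "unauthorized" or "offline" still need attention on the phone.
        if len(parts) == 2 and parts[1] == "device":
            serials.append(parts[0])
    return serials


def install_apk(apk_path: str) -> None:
    """Sideload an APK onto the first ready device, replacing any older install."""
    listing = subprocess.run(
        ["adb", "devices"], capture_output=True, text=True, check=True
    ).stdout
    ready = parse_adb_devices(listing)
    if not ready:
        raise RuntimeError("no authorized Android device found; check developer mode")
    # `-s` selects the device by serial, `-r` reinstalls over an existing app.
    subprocess.run(["adb", "-s", ready[0], "install", "-r", apk_path], check=True)
```

Calling `install_apk("CardboardTest.apk")` (a hypothetical file name) would then push the build to the phone, provided developer mode and USB debugging are enabled.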

Cameron (Mac or Windows > AR on ARCore-Compatible Android Phone)

After being a little disappointed with the limitations of developing a Google Cardboard experience, Cameron decides to transition to Augmented Reality development.

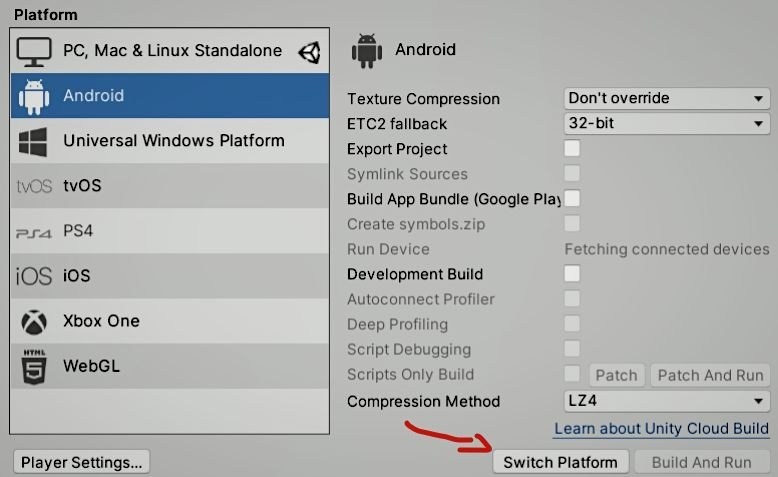

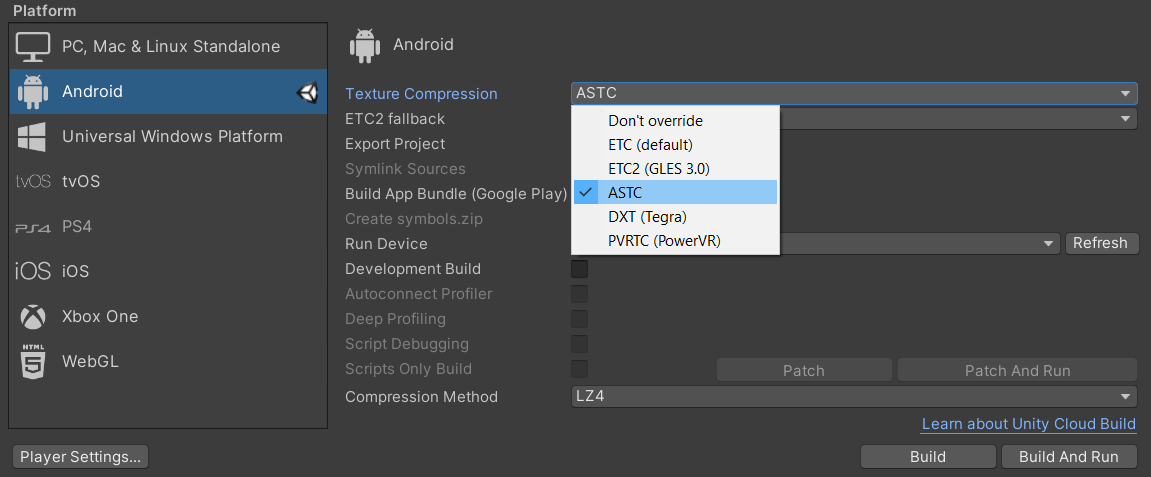

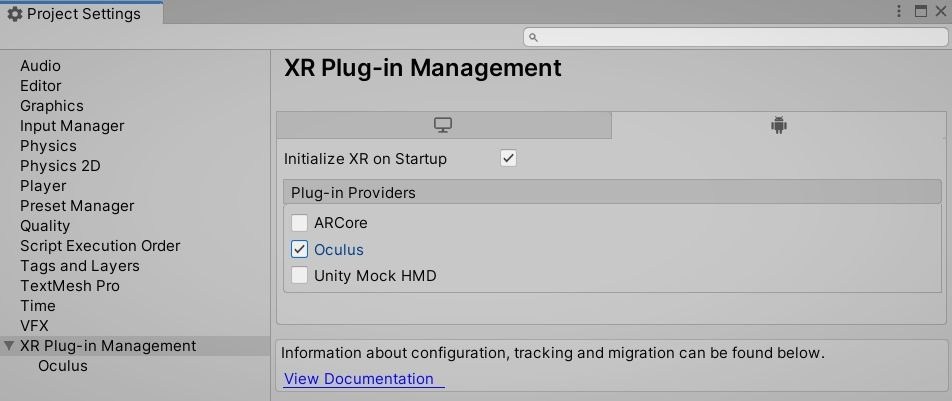

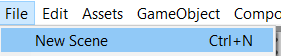

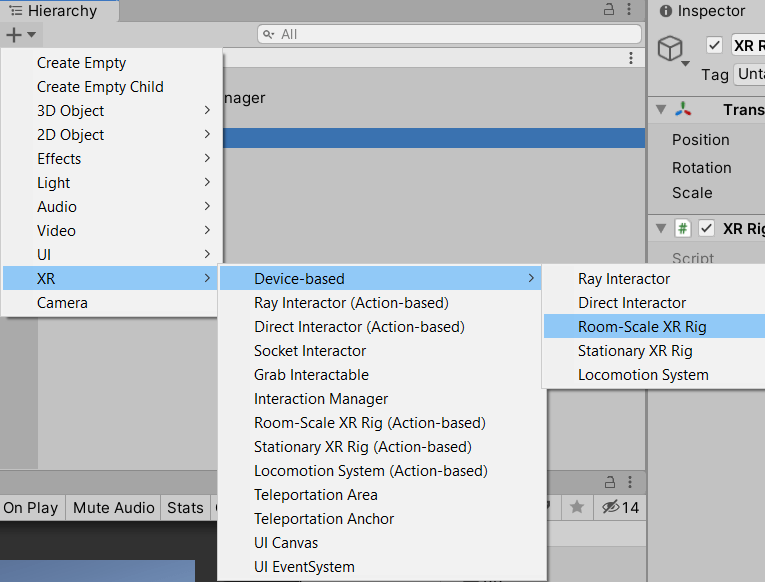

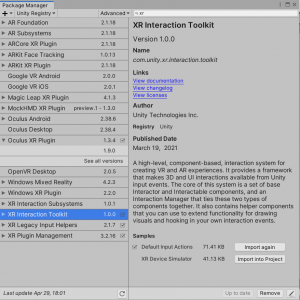

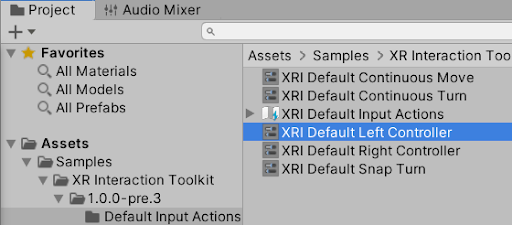

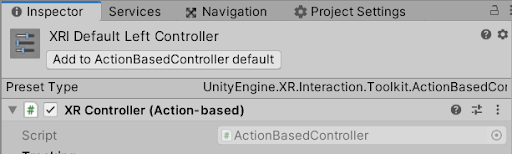

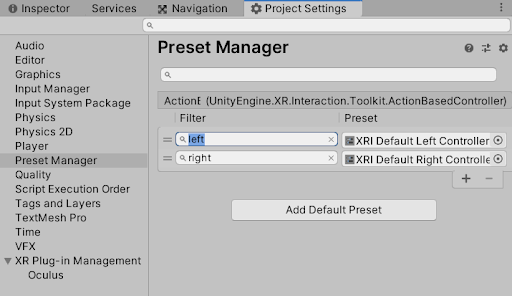

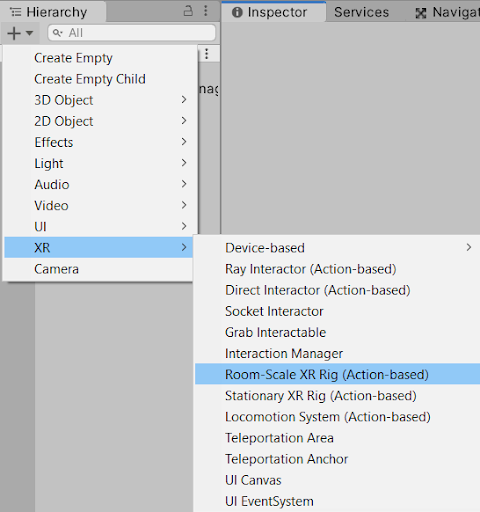

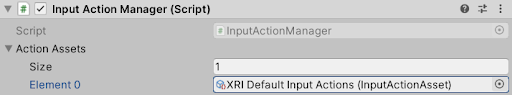

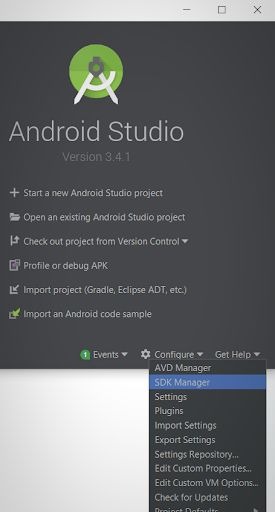

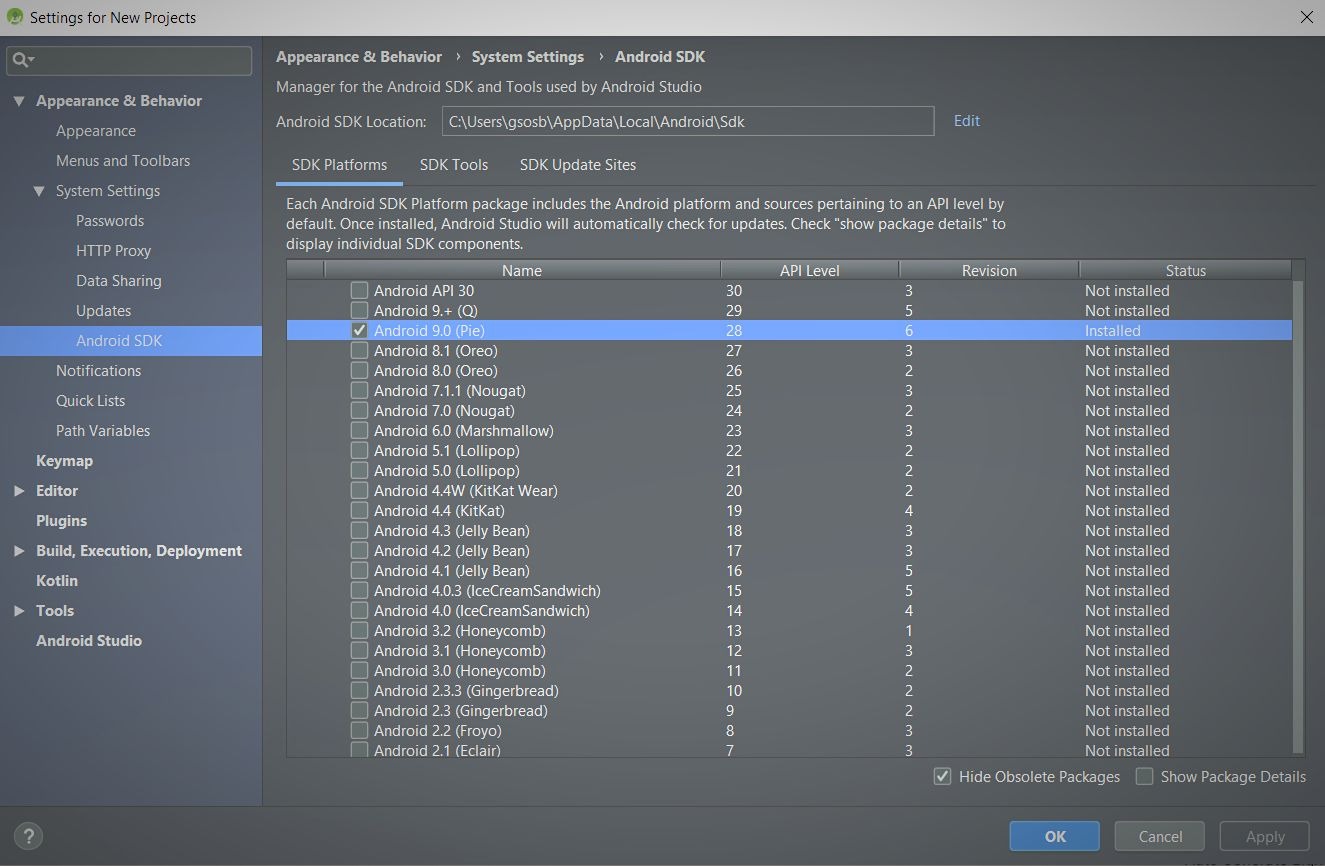

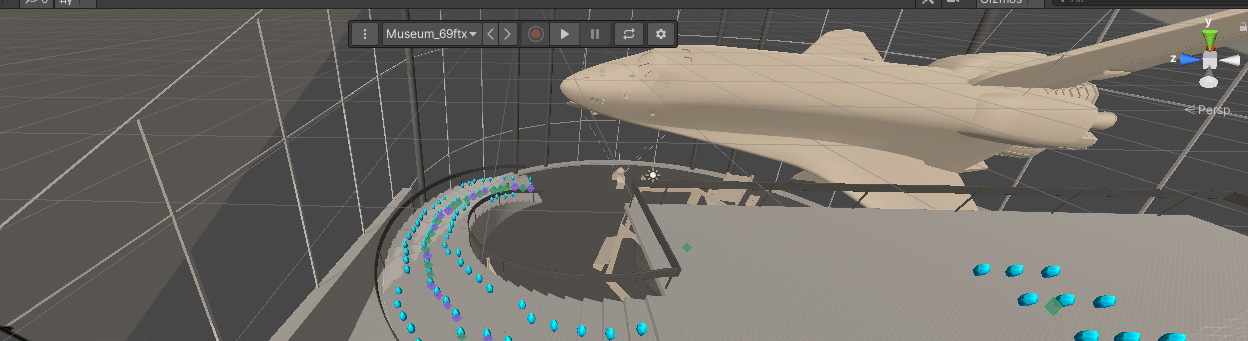

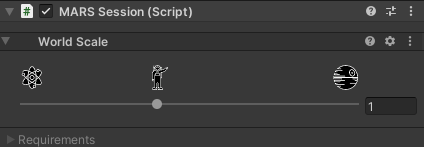

After checking that their phone is compatible with ARCore, and keeping developer mode turned on, they create a Unity project with the XR Interaction Toolkit and enable ARCore in their build. They’re now ready to develop AR for their Android phone from either a Windows or a Mac computer.

Cameron (Windows > AR on iPhone)

Cameron has achieved some success developing AR for their Android phone, and so decides to expand their most recent app onto iOS devices. Unfortunately, their laptop won’t cut it: they can’t use their Windows machine for iOS development, because building for iOS requires Xcode, which runs only on macOS.

Cameron (Mac > AR on iOS)

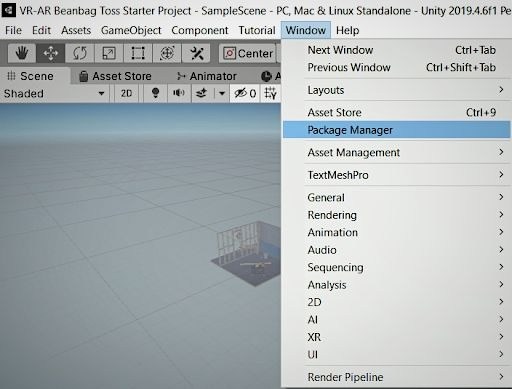

So, Cameron invests in a MacBook laptop and borrows their friend’s iPad after finding it on the list of ARKit-compatible devices. They pay $99 for the Apple developer account and download Xcode so they can load their app onto the iPad. They import the ARKit package from Unity’s Package Manager, and now they can successfully port their app to iOS.
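As a quick recap, the outcomes of the stories can be condensed into a small lookup table. The Python sketch below is only an illustration: the key names, the wording of each outcome, and the `lookup` function are our own assumptions, and the table covers only the combinations described in this article.

```python
# (host computer, target device) -> outcome described in the stories.
# Keys and wording are illustrative; combinations not told in the
# article are deliberately left out rather than guessed.
STORIES = {
    ("mac", "quest"): "Build and Run only (Oculus Link will not run on a Mac)",
    ("mac", "rift"): "not supported",
    ("windows", "rift"): "supported",
    ("windows", "quest"): "supported, with Oculus Link",
    ("windows", "go"): "supported, sideload builds over the charging cable",
    ("windows", "cardboard"): "supported, sideload to an Android phone",
    ("mac", "cardboard"): "supported, sideload to an Android phone",
    ("windows", "arcore-android"): "supported",
    ("mac", "arcore-android"): "supported",
    ("windows", "ios-ar"): "not supported",
    ("mac", "ios-ar"): "supported, needs Xcode and an Apple developer account",
}


def lookup(host: str, target: str) -> str:
    """Return the outcome for a host/target pair, ignoring case and spaces."""
    key = (host.strip().lower(), target.strip().lower())
    return STORIES.get(key, "not covered by these stories")
```

For example, `lookup("Windows", "ios-ar")` returns `"not supported"`, which is the roadblock Cameron hit before buying the MacBook.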

Closing:

We hope these stories have made the beginning of your XR dev journey a little clearer. Are you interested in learning more about XR development? We are currently accepting applications…

Also, if you have questions about this article, please send them our way.

XR Terra Team